OPC is Cclub's new architecture for Org and Personal Colocated VMs. It's built using the Xen hypervisor and libvirt management layer. This approach gives both Computer Club administrators and the VM owners the ability to directly administer and manage the VMs, striking a balance between previous approaches we've taken. In this way, the Computer Club is able to easily handle the VMs when necessary (e.g., shutting VMs down for a planned power outage or because ISO alerts us that there was a break-in), but the owners are also able to utilize the management layer to directly solve problems (e.g., rebooting a crashed VM or using console access to debug a boot problem).

Basics

As mentioned above, OPC uses libvirt to handle VM management. A wide variety of tools are built on top of libvirt. The primary command-line tool is virsh. You may already be familiar with virsh if you previously had a VM on the Daemon Engine architecture. Virsh is most easily available from the physical machines hosting OPC VMs. (Note, however, that the physical machines are on CMU-internal IP addresses. To connect to one of them via ssh, you will need to connect from the CMU campus, use the campus VPN, or go through an intermediary machine at CMU with a public IP address, such as a Cclub shell server.) The OPC physical machines are set up so that a virsh command without any --connect argument will access the local VMs.

Example Commands

Here are some example operations that you can perform. The commands will only show and work on VMs you have access to: VMs that you own, or VMs that are owned by a group you belong to. (In the examples, I am able to access two VMs: the stretch-test VM owned by the cclub group and the kbare.opc-test VM that I personally own.) If you are not seeing your expected VMs on any given host, please let us know at operations@club.cc.cmu.edu.

List running VMs:

    kbare@opc-hp-01:~$ virsh list
     Id    Name                           State
    ----------------------------------------------------
     2     g:cclub:stretch-test           running
     3     kbare:kbare.opc-test           running

Shut down a VM:

    kbare@opc-hp-01:~$ virsh shutdown kbare:kbare.opc-test
    Domain kbare:kbare.opc-test is being shutdown

List all VMs:

    kbare@opc-hp-01:~$ virsh list --all
     Id    Name                           State
    ----------------------------------------------------
     2     g:cclub:stretch-test           running
     -     kbare:kbare.opc-test           shut off

Start a stopped VM:

    kbare@opc-hp-01:~$ virsh start kbare:kbare.opc-test
    Domain kbare:kbare.opc-test started
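Note that virsh shutdown only asks the guest to shut down; the command returns before the VM has actually stopped. If you want to script a clean stop followed by a start, one option is to poll virsh domstate until it reports "shut off". The following is a minimal sketch, not an official Cclub tool: the function name wait_for_shutoff and the default 60-second timeout are made up for illustration.

```shell
# Hypothetical helper: wait until a VM reports the "shut off" state.
# Usage: wait_for_shutoff <vmname> [timeout-seconds]
wait_for_shutoff() (
    vm=$1
    timeout=${2:-60}   # assumed default; pick what suits your guest
    elapsed=0
    state=unknown
    while [ "$elapsed" -lt "$timeout" ]; do
        # virsh domstate prints the state followed by a blank line;
        # keep only the first line.
        state=$(virsh domstate "$vm" | head -n 1)
        [ "$state" = "shut off" ] && exit 0
        sleep 1
        elapsed=$((elapsed + 1))
    done
    echo "timed out waiting for $vm (last state: $state)" >&2
    exit 1
)
```

For example: `virsh shutdown kbare:kbare.opc-test && wait_for_shutoff kbare:kbare.opc-test 120 && virsh start kbare:kbare.opc-test`.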

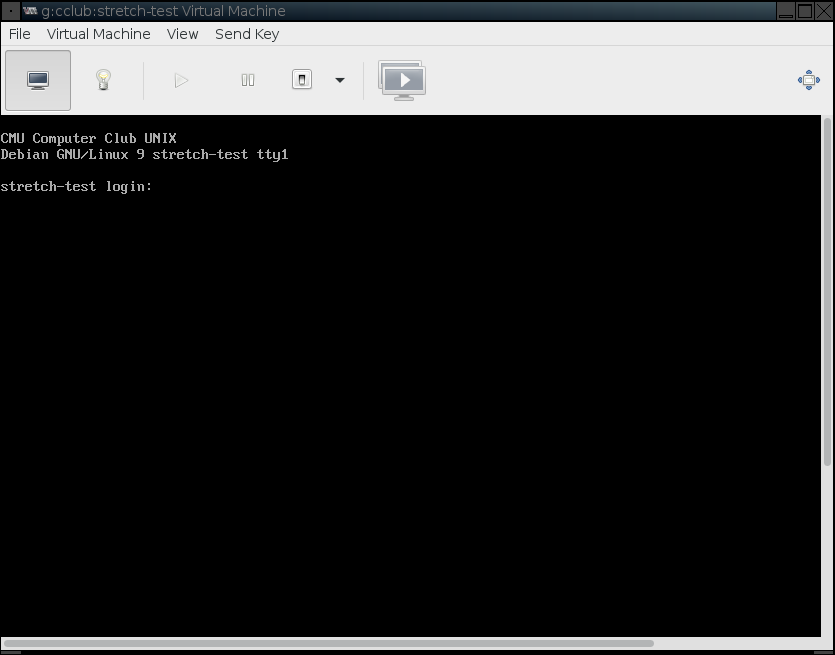

Connect to a VM's virtual serial console:

    kbare@opc-hp-01:~$ virsh console g:cclub:stretch-test
    Connected to domain g:cclub:stretch-test
    Escape character is ^]
    CMU Computer Club UNIX
    Debian GNU/Linux 9 stretch-test ttyS0
    stretch-test login: kbare
    Password:
    Last login: Fri Mar 9 07:41:55 UTC 2018 from 172.29.24.40 on pts/0
    Linux stretch-test 4.9.0-5-amd64 #1 SMP Debian 4.9.65-3+deb9u2 (2018-01-04) x86_64
    The programs included with the Debian GNU/Linux system are free software;
    the exact distribution terms for each program are described in the
    individual files in /usr/share/doc/*/copyright.
    Debian GNU/Linux comes with ABSOLUTELY NO WARRANTY, to the extent
    permitted by applicable law.
    No mail.
    kbare@stretch-test:~$ hostname
    stretch-test
    kbare@stretch-test:~$ exit
    logout
    CMU Computer Club UNIX
    Debian GNU/Linux 9 stretch-test ttyS0
    stretch-test login:

Type the escape character, ctrl-] (shown as ^] when the console connects), to leave the console session.

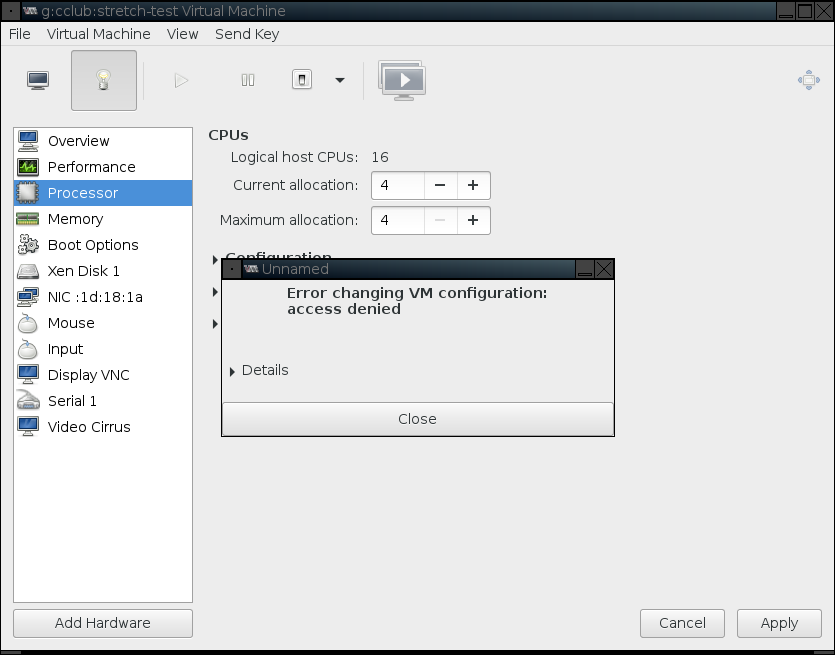

Disallowed Commands

Not all commands for managing VMs are available. As a general rule, commands that do not affect a VM's persistent configuration or resource allocation are allowed. Other commands are blocked.

E.g.,

    kbare@opc-hp-01:~$ virsh setmem kbare:kbare.opc-test 20GB
    error: access denied

Additional References

virsh help—list of all virsh commands

virsh help domain—list of virsh commands for examining and manipulating VMs

virsh help «command»—details of a specific virsh command

man virsh

Remote Management

Libvirt supports remote management, allowing you to run virsh and other libvirt applications on your own machine in order to manage OPC VMs. For example, virt-manager is a GUI tool that supports many of the same operations as virsh. You can also run virt-viewer to connect to the graphical console of your VM.

We only support remote access via ssh tunneling, with xen+ssh:// URLs. E.g., you will need to use an option like --connect xen+ssh://opc-hp-01.club.cc.local.cmu.edu/ with the libvirt applications. Unfortunately, this requires additional configuration if you are not connecting from CMU. And, even if you are connecting from CMU, additional configuration is advisable for the smoothest operation.
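Typing the full connection URL for every command gets tedious. As a small convenience, the URL can be built from the short host name. This is only a sketch: the function names opc_uri and opc_virsh are invented for this example, and it assumes every OPC physical machine lives under club.cc.local.cmu.edu as opc-hp-01 does.

```shell
# Hypothetical helper: build the libvirt URL for an OPC host
# from its short name, e.g. opc-hp-01.
opc_uri() {
    printf 'xen+ssh://%s.club.cc.local.cmu.edu/\n' "$1"
}

# Hypothetical helper: run virsh against a given OPC host.
# Usage: opc_virsh <short-hostname> <virsh arguments...>
opc_virsh() (
    host=$1
    shift
    virsh --connect "$(opc_uri "$host")" "$@"
)
```

For example, `opc_virsh opc-hp-01 list --all` runs the same command as `virsh --connect xen+ssh://opc-hp-01.club.cc.local.cmu.edu/ list --all`.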

SSH Configuration: Persistent Connections

Libvirt applications may need several sessions with the OPC physical machine in order to accomplish their work. This can slow things down significantly, as initial negotiations for an SSH connection require some time-consuming cryptography. For better responsiveness, we recommend setting up OpenSSH's support for shared persistent connections. To enable this for the OPC physical machines, add the following to the end of ~/.ssh/config:

    Host opc-*.club.cc.local.cmu.edu
        ControlMaster auto
        ControlPath ~/.ssh/control-%r-at-%h-%p
        ControlPersist 15m

This instructs ssh to look for a special control socket before it attempts to connect to the OPC physical machines. If the control socket does not exist, ssh will connect normally, but will create the control socket and listen on it for commands. If the socket does exist, ssh will communicate over the socket to some existing, already-connected ssh process, telling it to open an additional channel for a new session on the target machine--which is quite fast. Moreover, the first ssh process will maintain its connection for 15 minutes after all sessions have been closed, meaning that if additional sessions are needed before the 15 minute timeout, they can also be created quickly.
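If you are curious whether a shared connection is currently in place, OpenSSH can query the control socket with ssh -O check, and ssh -O exit asks the master process to close right away instead of waiting for the 15 minute timeout. The wrapper names below (opc_master_status, opc_master_close) are made up for this sketch; only the ssh -O subcommands come from OpenSSH itself.

```shell
# Hypothetical helper: report whether a master connection to a host is up.
# "ssh -O check" exits 0 when a master process is listening on the
# control socket and non-zero otherwise.
opc_master_status() {
    if ssh -O check "$1" 2>/dev/null; then
        echo up
    else
        echo down
    fi
}

# Hypothetical helper: close the master connection immediately.
opc_master_close() {
    ssh -O exit "$1"
}
```

For example, `opc_master_status opc-hp-01.club.cc.local.cmu.edu` prints "up" shortly after any virsh command that used the xen+ssh:// URL for that host.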

SSH Configuration: Kerberos

Even if you set up persistent SSH connections, libvirt applications will still need to establish a new SSH connection if there isn't already one available. This requires authentication, which would typically mean typing in your Cclub password. That can be inconvenient, e.g., if you are trying to run a graphical application and SSH tries to read your password from a terminal. However, with some more configuration, you can make ssh to Cclub systems authenticate via Kerberos instead of asking for a password. For this to work, you may need to install a package containing the Kerberos clients. Then add the following directives to the end of ~/.ssh/config:

    Host *.club.cc.cmu.edu *.club.cc.local.cmu.edu
        GSSAPIAuthentication yes
        GSSAPIDelegateCredentials yes

Now if you get Kerberos tickets for your club account, ssh will not ask for your password when you connect to Cclub machines until the tickets expire (by default, 8 hours later). E.g.,

    # Without Kerberos tickets, the ssh connection triggered by virsh prompts for a password
    kbare@musicality:~$ virsh -c xen+ssh://opc-hp-01.club.cc.local.cmu.edu/ list --all
    kbare@opc-hp-01.club.cc.local.cmu.edu's password: ^C
    kbare@musicality:~$ kinit kbare@CLUB.CC.CMU.EDU
    kbare@CLUB.CC.CMU.EDU's Password:
    # After obtaining Kerberos tickets, there is no password prompt.
    kbare@musicality:~$ virsh --connect xen+ssh://opc-hp-01.club.cc.local.cmu.edu/ list --all
     Id    Name                           State
    ----------------------------------------------------
     2     g:cclub:stretch-test           running
     -     kbare:kbare.opc-test           shut off

SSH Configuration: Access to OPC Machines from Off Campus

As mentioned, remote access to the OPC physical machines does not work by default if you are connecting from outside of CMU, since they have IP addresses that are only reachable from the CMU network. However, you can go through another Internet-accessible machine in order to reach them. OpenSSH can be configured to automatically set up and use a second SSH session as a tunnel when you request an ssh connection to any of the OPC physical machines. This is done by adding the following to the end of ~/.ssh/config:

    Host opc-*.club.cc.local.cmu.edu
        ProxyCommand ssh -l %r -e none opc-gateway.club.cc.cmu.edu nc -w 3 %h %p

When using this configuration, it is very helpful to also configure persistent connections and Kerberos, as recommended in the previous sections. Tunneled connections can take a lot longer to establish, making persistent connections a big time saver. And Kerberos eliminates the need to type in a password both for the underlying tunnel and for the connection through it to the OPC physical machine.
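If you want all three sets of directives (persistent connections, Kerberos, and the gateway tunnel), they can be appended in one step. The sketch below simply combines the settings from the sections above. The function name write_opc_ssh_config and the marker comment it uses to avoid adding the settings twice are inventions for this example, so review the result before relying on it.

```shell
# Hypothetical helper: append the OPC-related ssh settings to a config file.
# Usage: write_opc_ssh_config [config-file]   (default: ~/.ssh/config)
write_opc_ssh_config() (
    config=${1:-$HOME/.ssh/config}
    # Marker line (made up for this sketch) so a second run is a no-op.
    marker='# OPC libvirt access: persistent connections, Kerberos, gateway'
    if [ -f "$config" ] && grep -qxF "$marker" "$config"; then
        echo "$config already contains the OPC settings" >&2
    else
        cat >> "$config" <<EOF
$marker
Host opc-*.club.cc.local.cmu.edu
    ControlMaster auto
    ControlPath ~/.ssh/control-%r-at-%h-%p
    ControlPersist 15m
    ProxyCommand ssh -l %r -e none opc-gateway.club.cc.cmu.edu nc -w 3 %h %p

Host *.club.cc.cmu.edu *.club.cc.local.cmu.edu
    GSSAPIAuthentication yes
    GSSAPIDelegateCredentials yes
EOF
    fi
)
```

Leave out the ProxyCommand line if you only ever connect from the CMU network, since it routes every connection to the OPC physical machines through the gateway.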

Access to a VM's Graphical Console

If you install virt-viewer on your (Unix-flavored) local machine, you can use the remote management functionality to access your VM's graphical console. This works by establishing an SSH session to the OPC physical machine and connecting to a VNC server connected to your VM.

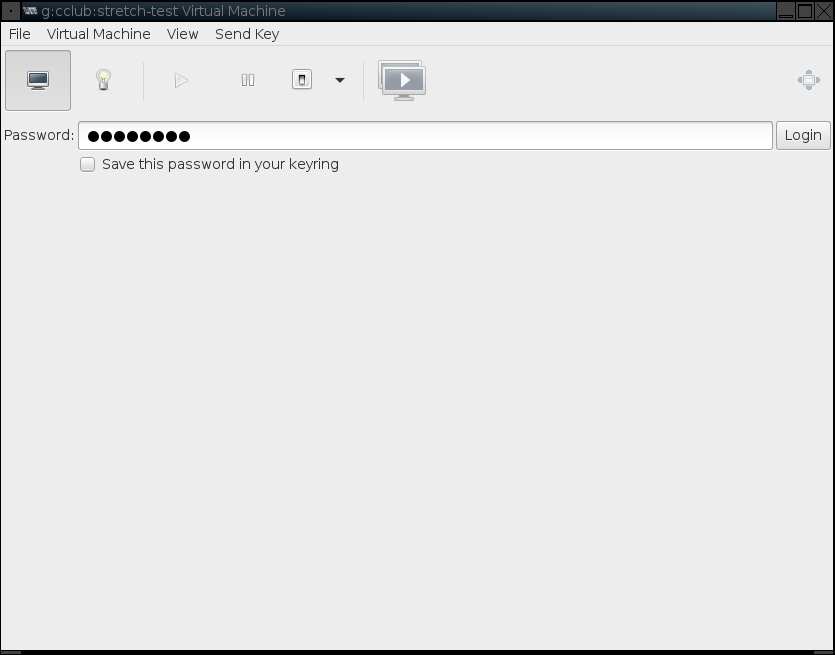

Unfortunately, this isn't quite as smooth as it might be. To prevent unauthorized users from connecting to your VM, we've set up a VNC password. It's available through libvirt, but for some reason virt-viewer is not smart enough to find and use it. Consequently, you'll need to read it yourself using virsh dumpxml <vmname> --security-info. E.g.,

    kbare@musicality:~$ virsh --connect xen+ssh://opc-hp-01.club.cc.local.cmu.edu/ dumpxml g:cclub:stretch-test --security-info | grep -i 'vnc.*pass'
        <graphics type='vnc' port='-1' autoport='yes' listen='127.0.0.1' keymap='en-us' passwd='abCDefGH'>

The password will be shown as the passwd attribute of the graphics XML element. In this example, it is abCDefGH. The VNC password will not change unless you ask us, so you are welcome to memorize it or put it in a password manager, so that you can avoid the dumpxml step in the future.
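If you would rather print just the password than pick it out of the XML by eye, a small sed filter can extract the passwd attribute. This is a rough sketch: the function name vnc_passwd is made up, and it assumes the attribute is written with single quotes on the same line as the graphics element, as in the dumpxml output shown here.

```shell
# Hypothetical helper: read "virsh dumpxml --security-info" output on stdin
# and print the value of the passwd attribute of the first <graphics> element.
vnc_passwd() {
    sed -n "s/.*<graphics [^>]*passwd='\([^']*\)'.*/\1/p" | head -n 1
}
```

It would be used as `virsh --connect <url> dumpxml <vmname> --security-info | vnc_passwd`.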

Then, to connect to the graphics console, run virt-viewer --connect <url> <vmname> and type the VNC password into the pop-up password dialog. The program may grab your mouse. By default, the mouse can be released by pressing the left Ctrl and Alt keys simultaneously.

For more information on virt-viewer, see:

virt-viewer --help

man virt-viewer

Graphical Management of VMs

If you're not a big fan of command line tools, virt-manager provides a GUI for much of virsh's functionality.

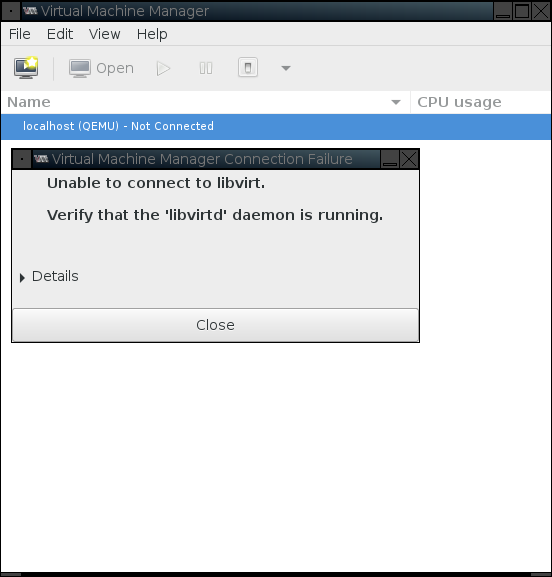

When you first start virt-manager, you'll probably see an error message similar to the following:

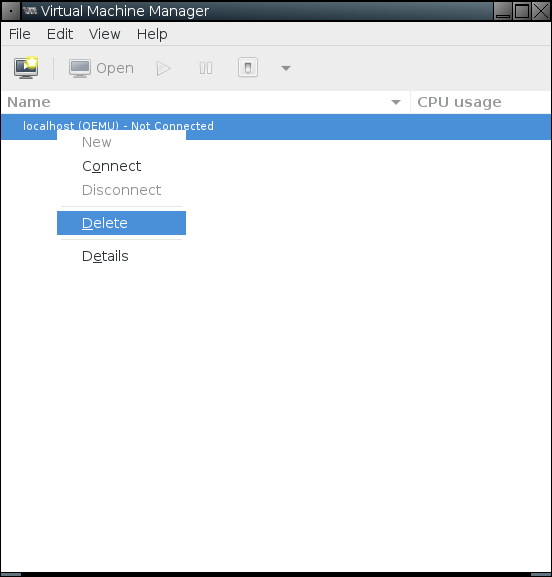

Don't worry. It's trying to connect to VMs on your local machine, and you probably don't have any. To avoid having the message pop up in the future, you can delete the connection. Right click on "localhost (QEMU)" and select "Delete" from the context menu.

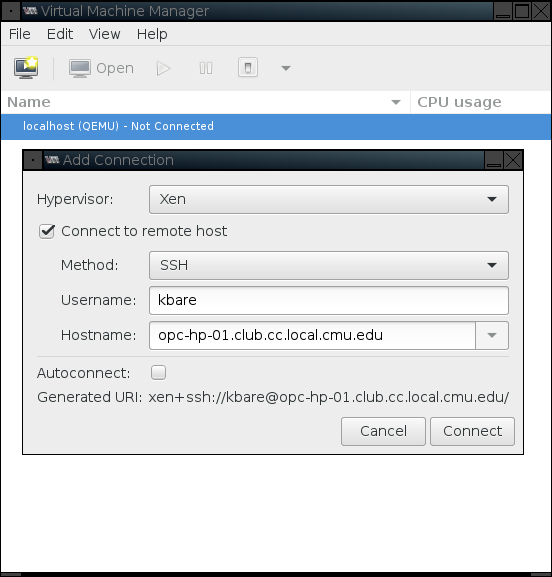

To access your OPC VMs, you'll need to tell virt-manager how to connect to the OPC physical machine hosting them. To do this, go to "File" -> "Add Connection" and fill out the dialog. Remember, we use the Xen hypervisor and SSH for connections. For example:

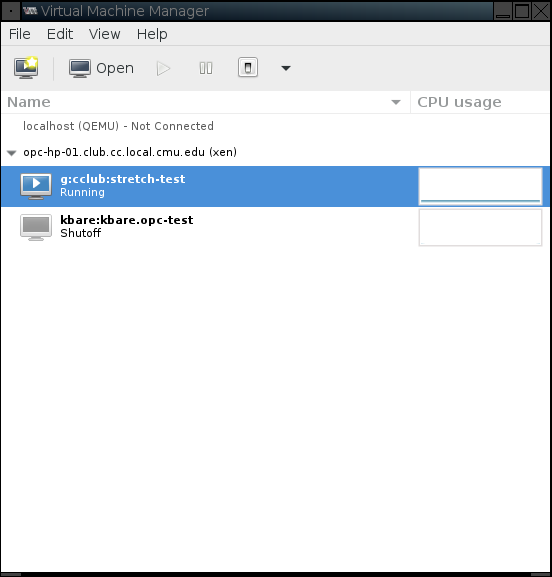

After the connection completes, virt-manager will show the connection and list of your VMs on the physical machine.

To get more details and controls to manage a specific VM, you can double click on its entry in the list. This will open a new window for that VM. By default it will show a tab for the VM's graphical console, but, as with virt-viewer, it needs you to type in the VNC password.

As with virsh, the operations you are allowed to perform are restricted. You cannot create new VMs, change a VM's persistent configuration, or change resource allocations. E.g., you cannot increase the number of virtual CPUs allocated to your VM.

For more information on virt-manager, see:

Red Hat's documentation: Managing Guests with the Virtual Machine Manager (virt-manager)

Storage Management

In some cases it may be necessary or desirable to manipulate storage associated with VMs. The key components of the OPC architecture that make this possible are the Linux Logical Volume Manager (LVM), libvirt's storage pools layer, and libguestfs.

Accessing a Stopped VM's Files

In some cases, it may be useful to read, or even modify, the files inside a VM from the OPC physical machine it runs on. Perhaps a security incident needs to be investigated without powering the VM on. Or maybe a problem is preventing the VM from booting correctly. libguestfs is able to provide access to the files within a VM, helping with these sorts of situations. However, please remember that external read-write access to the VM's files is not safe while the VM is running. External read-only access will not cause serious problems if the VM is running, but it may see odd behavior and inconsistencies. For read-only access, it is better to snapshot, stop, or pause the VM first.

In any case, you can make the VM's filesystems available from the OPC physical machine via the guestmount command. The command takes the form guestmount -d <vmname> -i [--ro|--rw] <mountpoint>. E.g.,

    kbare@opc-hp-01:~$ virsh list --state-shutoff
     Id    Name                           State
    ----------------------------------------------------
     -     kbare:kbare.opc-test           shut off
    kbare@opc-hp-01:~$ mkdir -p /run/user/$UID/kbare.opc-test
    kbare@opc-hp-01:~$ guestmount -d kbare:kbare.opc-test -i --rw /run/user/$UID/kbare.opc-test

Please be patient when running guestmount—the command may take tens of seconds to return.

At this point, all the VM's files are available for manipulation at the specified mount point.

    kbare@opc-hp-01:~$ ls /run/user/$UID/kbare.opc-test
    bin   etc         initrd.img.old  lost+found  opt   run   sys  var
    boot  home        lib             media       proc  sbin  tmp  vmlinuz
    dev   initrd.img  lib64           mnt         root  srv   usr  vmlinuz.old

And, since we used a read-write mount, we can fix an issue in grub.cfg that prevents the VM from booting.

kbare@opc-hp-01:~$ vim /run/user/$UID/kbare.opc-test/boot/grub/grub.cfg

Finally, before restarting the VM, we need to unmount the VM's filesystem from the physical machine.

kbare@opc-hp-01:~$ guestunmount /run/user/$UID/kbare.opc-test
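Because a read-write mount of a running VM's disk is unsafe, it can be worth guarding against it in a script. Here is a sketch of such a guard, assuming it runs on the OPC physical machine hosting the VM. The function name mount_stopped_vm_rw is hypothetical, and the check relies on virsh domstate printing "shut off" for a stopped VM.

```shell
# Hypothetical helper: mount a VM's filesystems read-write, but only if
# the VM is shut off.
# Usage: mount_stopped_vm_rw <vmname> <mountpoint>
mount_stopped_vm_rw() (
    vm=$1
    mnt=$2
    # First line of "virsh domstate" output is the state; an empty
    # result (e.g. virsh failed) also refuses the mount.
    state=$(virsh domstate "$vm" | head -n 1)
    if [ "$state" != "shut off" ]; then
        echo "refusing read-write mount: $vm is '$state', not 'shut off'" >&2
        exit 1
    fi
    mkdir -p "$mnt" && guestmount -d "$vm" -i --rw "$mnt"
)
```

For example: `mount_stopped_vm_rw kbare:kbare.opc-test /run/user/$UID/kbare.opc-test`. Remember to run guestunmount on the mount point before starting the VM again.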

The guestmount and guestunmount commands are just two of several tools provided by libguestfs. For more information, see:

man guestmount

man guestfish

Creating, Mounting, and Deleting Snapshots of a VM's Storage

Every owner of OPC VMs is provided with an LVM volume group that stores the disk images for the owner's VMs. The volume group is integrated into libvirt's storage pools layer, which allows you to manipulate the volume group and the logical volumes it contains via virsh commands. E.g., to list the storage pools you can access, list the volumes they contain, and examine a volume:

    kbare@opc-hp-01:~$ virsh pool-list
     Name                 State      Autostart
    -------------------------------------------
     g:cclub              active     yes
     kbare                active     yes
    kbare@opc-hp-01:~$ virsh vol-list kbare
     Name                 Path
    ------------------------------------------------------------------------------
     kbare.opc-test       /dev/opc-vg.kbare/kbare.opc-test
    kbare@opc-hp-01:~$ virsh vol-info kbare.opc-test --pool kbare
    Name:           kbare.opc-test
    Type:           block
    Capacity:       6.00 GiB
    Allocation:     6.00 GiB

Maybe we want to back up the kbare.opc-test VM's files. We mentioned that we can use guestmount to access its filesystems when the VM is stopped or paused. However, that might be too disruptive, especially if there's a lot of data to copy. Instead, we can use LVM to create a copy-on-write snapshot. This presents an unchanging view of the VM's filesystem that is not subject to the oddities that would occur if we mounted the live filesystem while the guest was still using it. A snapshot can be taken with virsh vol-create-as <pool> <snapname> <snapsize> --backing-vol <originname>. E.g.,

    kbare@opc-hp-01:~$ virsh vol-create-as kbare kbare.opc-test.snapshot 2GiB --backing-vol kbare.opc-test
    Vol kbare.opc-test.snapshot created
    kbare@opc-hp-01:~$ virsh vol-info kbare.opc-test.snapshot --pool kbare
    Name:           kbare.opc-test.snapshot
    Type:           block
    Capacity:       6.00 GiB
    Allocation:     2.00 GiB

The snapshot will only store data that is overwritten in the origin logical volume. Hence, the snapshot can be sized smaller than the origin. Note that you can see how much space is free in a storage pool with virsh pool-info <pool>. E.g.,

    kbare@opc-hp-01:~$ virsh pool-info kbare
    Name:           kbare
    UUID:           17cca8f4-8200-4acc-bcc4-5afc8e321643
    State:          running
    Persistent:     yes
    Autostart:      yes
    Capacity:       10.00 GiB
    Allocation:     8.00 GiB
    Available:      2.00 GiB

We create storage pools with 15–25% of the space unallocated, to leave room to create copy-on-write snapshots like this.
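To see at a glance how much of a pool is still free, the Capacity and Available lines of virsh pool-info can be turned into a percentage. The following is only a sketch: the function name pool_percent_free is made up, and it assumes both values are reported in the same unit (it gives up with a non-zero exit status if they are not).

```shell
# Hypothetical helper: read "virsh pool-info <pool>" output on stdin and
# print the percentage of the pool that is still available.
pool_percent_free() {
    awk '
        $1 == "Capacity:"  { cap = $2; cap_unit = $3 }
        $1 == "Available:" { avail = $2; avail_unit = $3 }
        END {
            # Bail out if the capacity is missing or the units differ.
            if (cap == 0 || cap_unit != avail_unit) exit 1
            printf "%.0f\n", 100 * avail / cap
        }
    '
}
```

It would be used as `virsh pool-info kbare | pool_percent_free`.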

As with a halted VM, guestmount is used to mount the filesystem on the OPC physical machine. However, a different syntax is required to have it access the snapshot. Namely, guestmount --format=raw -a <snappath> -i --ro <mountpoint>. The snapshot path can be determined by virsh vol-list. E.g.,

    kbare@opc-hp-01:~$ virsh vol-list kbare
     Name                      Path
    ------------------------------------------------------------------------------
     kbare.opc-test            /dev/opc-vg.kbare/kbare.opc-test
     kbare.opc-test.snapshot   /dev/opc-vg.kbare/kbare.opc-test.snapshot
    kbare@opc-hp-01:~$ mkdir -p /run/user/$UID/kbare.opc-test
    kbare@opc-hp-01:~$ guestmount --format=raw -a /dev/opc-vg.kbare/kbare.opc-test.snapshot -i --ro /run/user/$UID/kbare.opc-test

At this point, the important data can be backed up. E.g.,

kbare@opc-hp-01:~$ tar -zcf ~/web-data.tar.gz -C /run/user/$UID/kbare.opc-test/var/www .

And then the VM's filesystem should be unmounted, and the snapshot deleted.

    kbare@opc-hp-01:~$ guestunmount /run/user/$UID/kbare.opc-test
    kbare@opc-hp-01:~$ virsh vol-delete kbare.opc-test.snapshot --pool kbare
    Vol kbare.opc-test.snapshot deleted

Please be aware that it is best not to leave snapshots around indefinitely. The overhead of copying data before it is overwritten can decrease your VM's performance. It's also possible that the snapshot can run out of space—which will make the snapshot invalid and no longer usable.
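Since forgotten snapshots are a problem, it can help to wrap the whole create, mount, use, unmount, delete sequence in one function that always cleans up. The sketch below strings together the commands from this section. The names with_snapshot and backup_www are invented for illustration, the snapshot is named <volume>.snapshot as in the example, and the temporary mount point comes from mktemp rather than /run/user/$UID.

```shell
# Hypothetical helper: run a command against a temporary read-only
# snapshot of a volume, then unmount and delete the snapshot.
# The mount point is passed to the command as its last argument.
# Usage: with_snapshot <pool> <volume> <snapsize> <command> [args...]
with_snapshot() (
    pool=$1 vol=$2 size=$3
    shift 3
    snap=$vol.snapshot
    virsh vol-create-as "$pool" "$snap" "$size" --backing-vol "$vol" || exit 1
    status=1
    # Ask libvirt for the snapshot's device path instead of guessing it.
    path=$(virsh vol-path "$snap" --pool "$pool") &&
    mnt=$(mktemp -d) && {
        if guestmount --format=raw -a "$path" -i --ro "$mnt"; then
            "$@" "$mnt"
            status=$?
            guestunmount "$mnt"
        fi
        rmdir "$mnt"
    }
    # Delete the snapshot whether or not the command succeeded.
    virsh vol-delete "$snap" --pool "$pool"
    exit "$status"
)
```

For example, after defining `backup_www() { tar -zcf ~/web-data.tar.gz -C "$1/var/www" .; }`, running `with_snapshot kbare kbare.opc-test 2GiB backup_www` would produce the same archive as the manual steps above.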

For more information, see:

virsh help pool—list of virsh commands for examining and manipulating storage pools

virsh help volume—list of virsh commands for examining and manipulating storage volumes

virsh help «command»—details of a specific virsh command

man virsh